Hand-Eye Calibration Problem

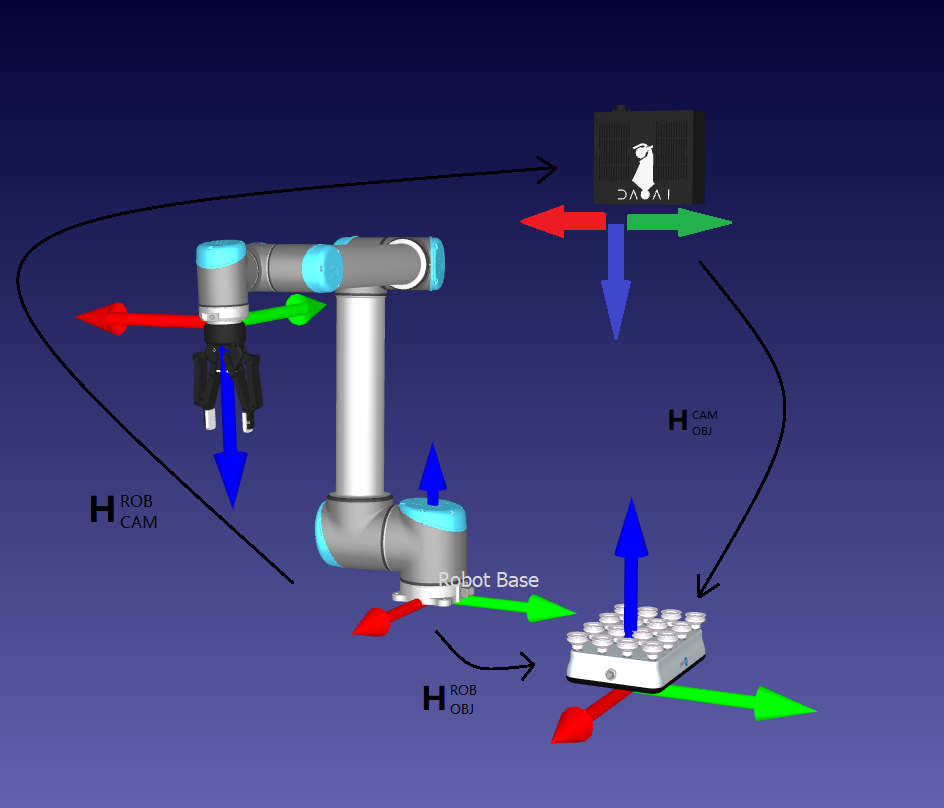

This tutorial describes the problem that hand-eye calibration solves and introduces the robot poses and coordinate systems that it requires. The problem is the same for eye-to-hand and eye-in-hand systems. We therefore describe the eye-to-hand configuration in detail first, and then point out the differences for the eye-in-hand configuration.

Eye-to-hand

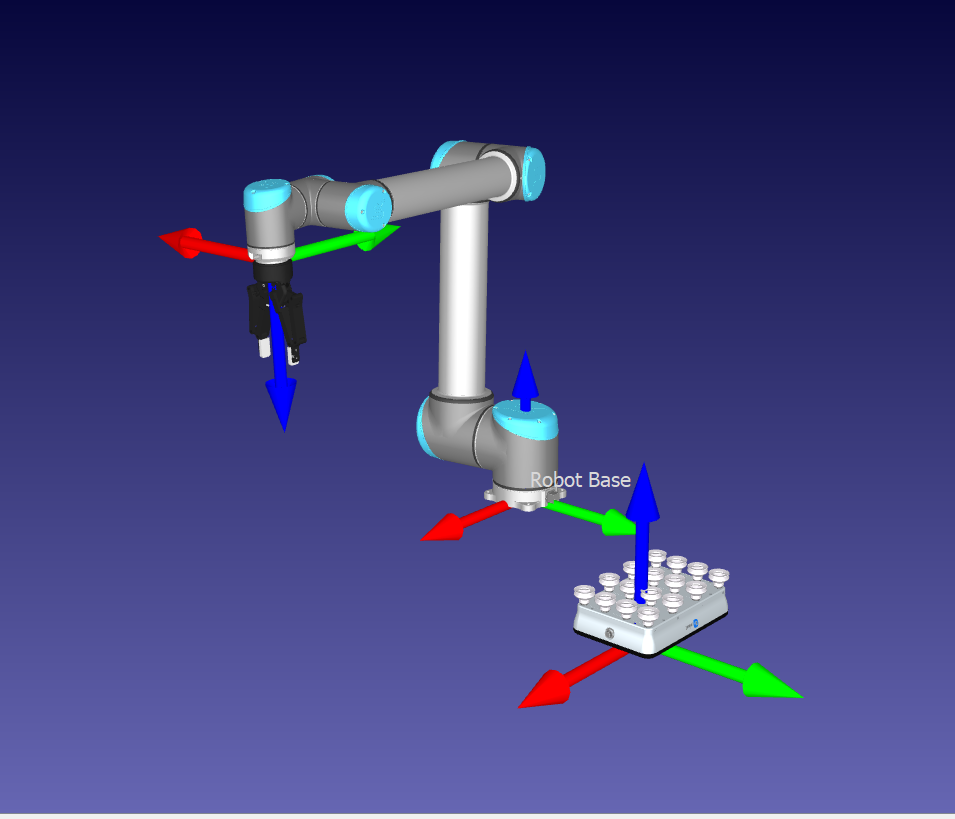

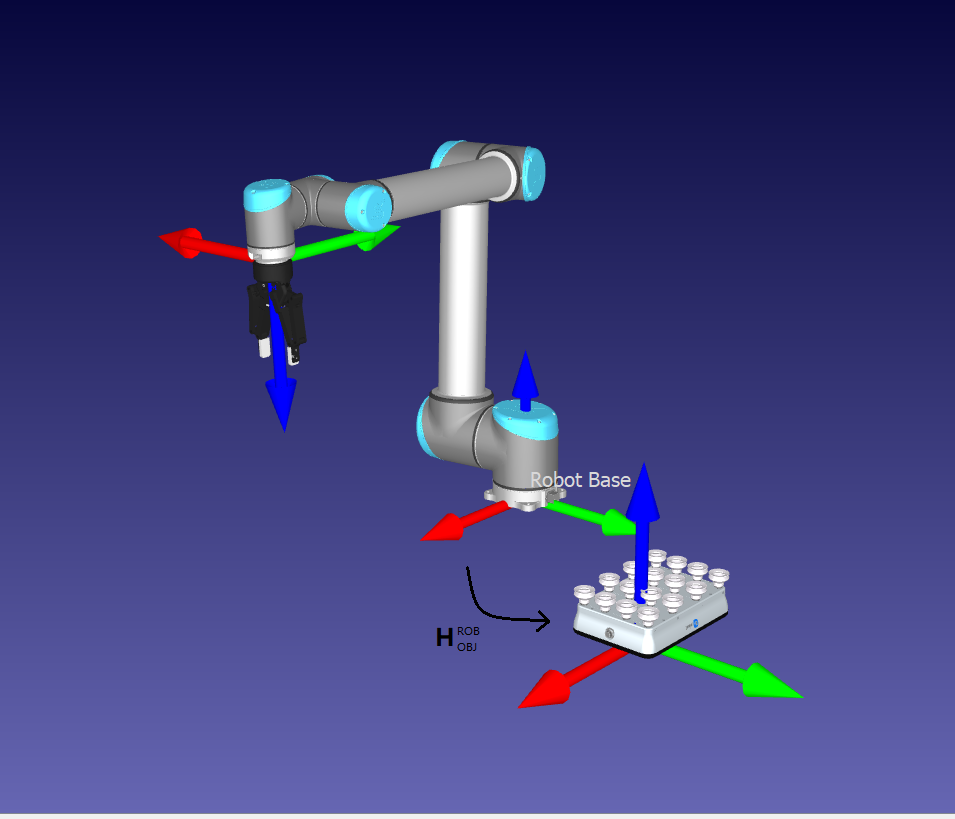

How can a robot pick an object?

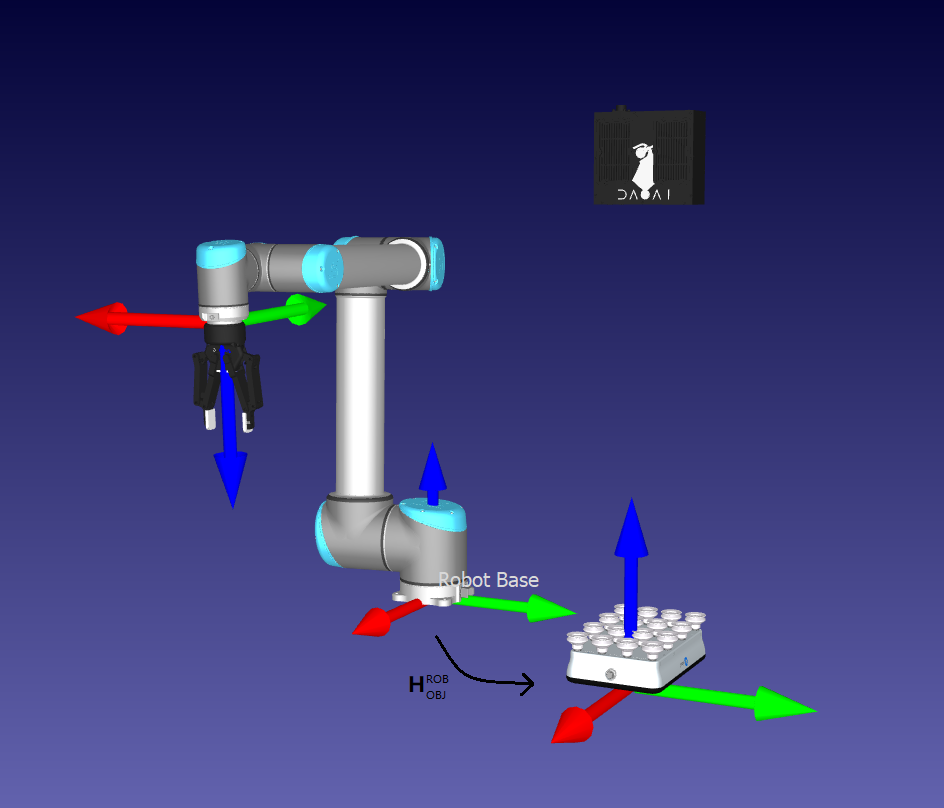

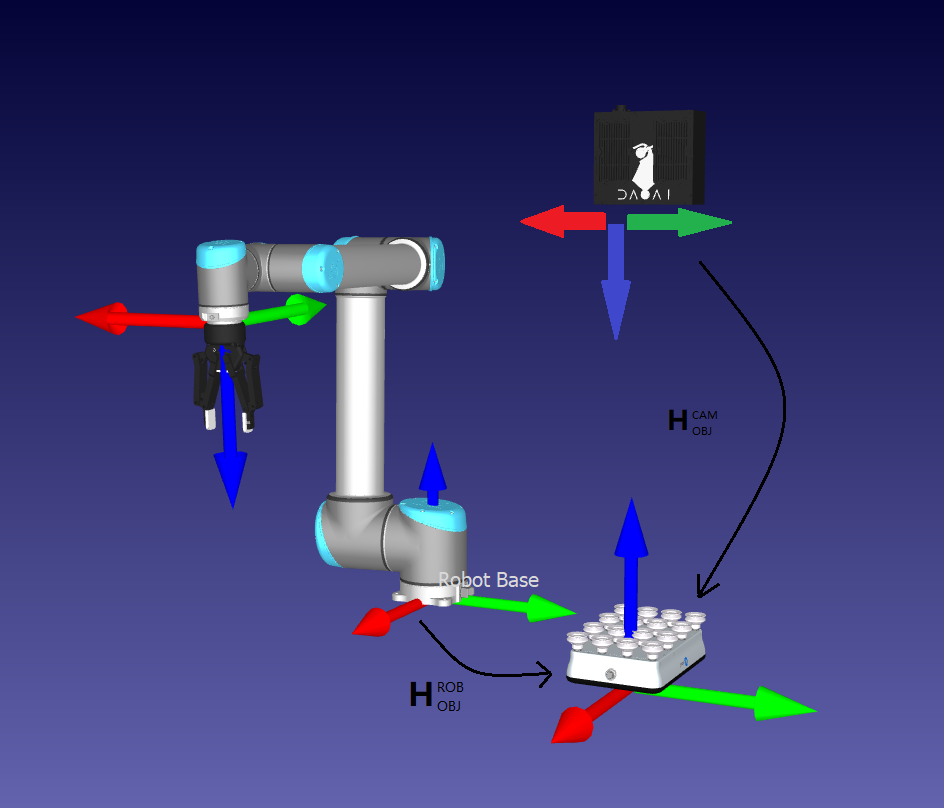

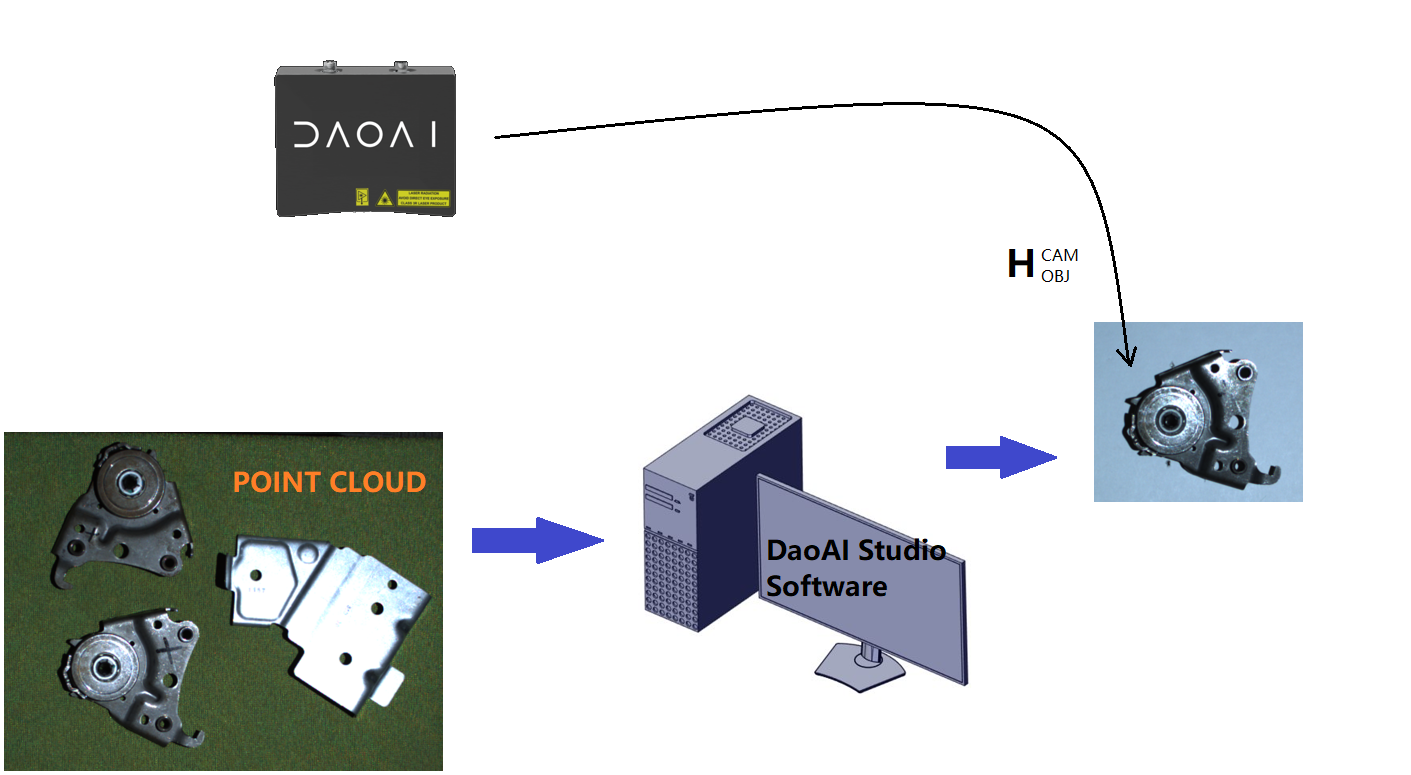

DaoAI point clouds are given relative to the DaoAI camera’s coordinate system. The origin of this coordinate system is fixed at the middle of the DaoAI imager lens (internal 2D camera). Machine vision software can run detection and localization algorithms on this collection of data points to determine the pose of the object in the DaoAI camera’s coordinate system.
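To make the role of the coordinate systems concrete, here is a minimal sketch of how an object pose expressed in the camera's coordinate system could be re-expressed in the robot base coordinate system. It assumes that the camera's pose in the robot base frame is already known, which is the quantity an eye-to-hand calibration is meant to supply. The names `make_pose`, `matmul4`, `base_T_cam`, and `cam_T_obj` are illustrative only and are not part of any DaoAI API, and all numeric values are made up.

```python
import math


def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def make_pose(yaw_deg, tx, ty, tz):
    """Build a 4x4 homogeneous transform from a rotation about Z and a translation."""
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [[c, -s, 0.0, tx],
            [s, c, 0.0, ty],
            [0.0, 0.0, 1.0, tz],
            [0.0, 0.0, 0.0, 1.0]]


# Hypothetical camera pose in the robot base frame (assumed known here;
# in practice this is what eye-to-hand calibration estimates).
base_T_cam = make_pose(90.0, 1.0, 0.0, 0.5)

# Hypothetical object pose in the camera frame, as a detection and
# localization algorithm might report it from the point cloud.
cam_T_obj = make_pose(0.0, 0.2, 0.1, 0.8)

# Chain the transforms: object pose expressed in the robot base frame.
base_T_obj = matmul4(base_T_cam, cam_T_obj)

# The last column of the first three rows holds the object position.
obj_position_in_base = [row[3] for row in base_T_obj[:3]]
print(obj_position_in_base)
```

Without `base_T_cam`, the object pose stays in camera coordinates and the robot cannot use it directly.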

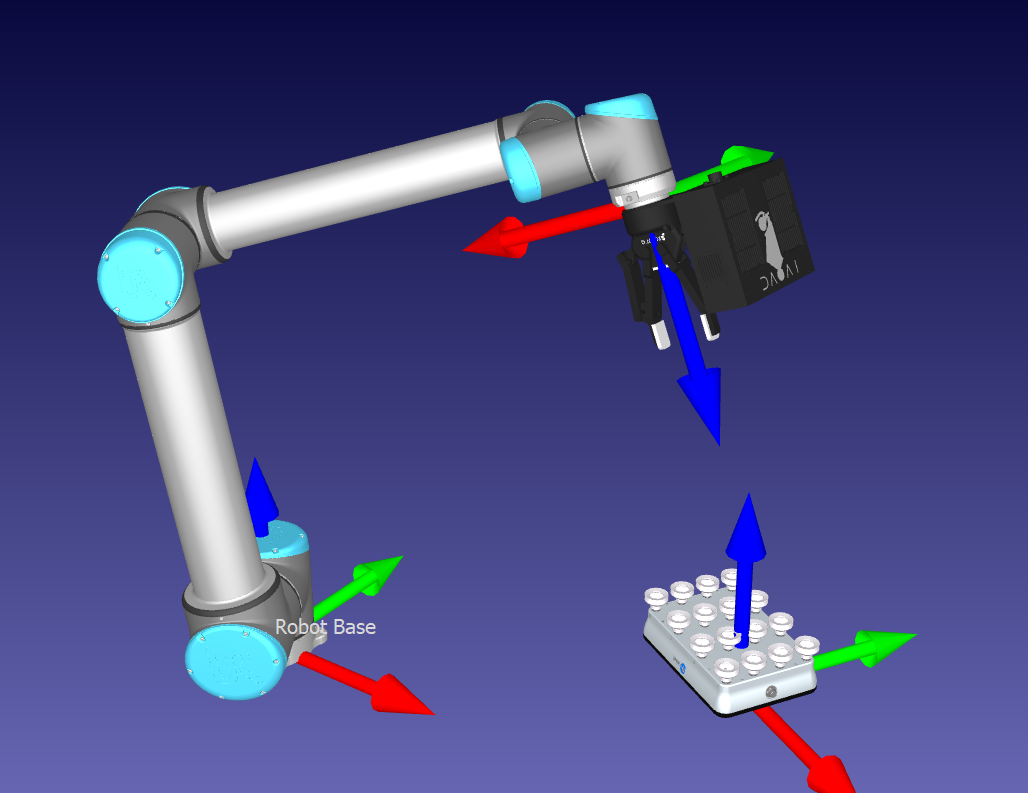

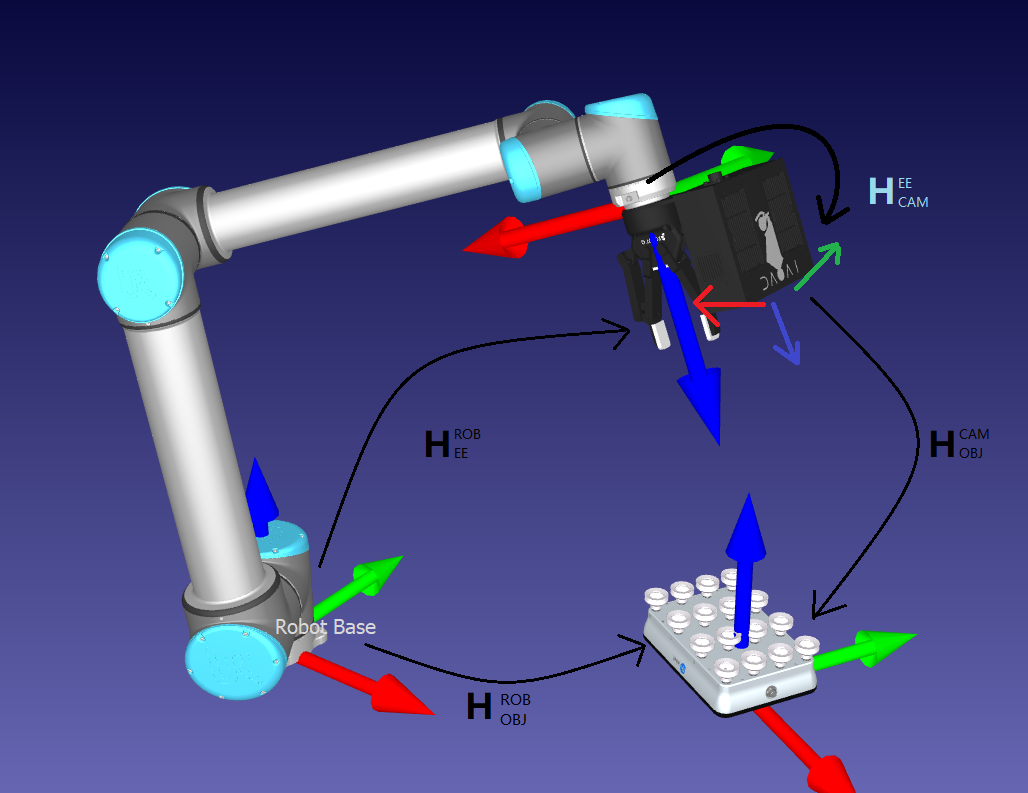

Eye-in-hand

How can a robot pick an object?

Now that we’ve defined the hand-eye calibration problem, let’s look at the Hand-Eye Calibration Solution.